Log analytics: find your hidden traffic

Google Analytics is not enough

Day by day, site owners are losing their grip on precious analytics data. This is primarily due to visitors' increasing, indiscriminate use of ad blockers. We're not going to engage here in a philosophical or moral discussion of such use. But the consequences are indisputably harmful for site owners (mainly e-commerce owners), who need to understand how users behave in order to improve their websites. If you're facing such data loss and are yearning for solutions, fret no more. The answer is log analytics!

What is log analytics and how does it solve the problem?

In everyday practice, it is safe to say that Google Analytics dominates the analytics space. It is the go-to platform for assessing user behaviour, relying mainly on client-side JavaScript (in the browser) to track interactions. As mentioned above, this way of tracking is no longer accurate: we have many customers complaining of a sudden drop in traffic (according to Google Analytics) even though there is no noticeable drop in sales. This clearly suggests that this tracking method will not be viable in the near future. Not only because of ad blockers, but also because of increasingly restrictive European legislation against the American "spy giant" (so says the European Commission, not us; as far as we're concerned Google is your friend, wink wink).

Anyway, let's not get sidetracked. Log analytics solves this issue by retrieving the data server-side. In this article we will use data coming from the HTTP server (nginx), but many other logs can be used to achieve the same goal (e.g. application logs). Provided, of course, that you own or have access to your logs... If not, feel free to leave this article and enjoy the various other topics on our YouTube channel, for example ;).

Alright, let's get on with those who own their logs. The good news is that log analytics is mostly compliant with European laws (at least for the next 5 to 10 years, the time needed for the Commission to understand the tech... ^^). In addition to tracking analytics, logs can be used to improve your site's performance, conversion rate, in brief anything you're used to getting from classical web analytics, and more.

The solution is Matomo?

Now let's get into the nitty-gritty. To do effective web analytics, you need two things: first, of course, the logs, and second, the technology to compile and transform the logs into human-readable analytics. For this, we believe there is currently no better tool on the market than Matomo. Why? Well, because it is ~~French~~ open source, free, and highly customizable, so it can be used by small or large businesses and adapted to the use case. This is not the case for its competitors, which are mostly paid and mostly overcomplicated. Big companies doing log analytics often overspend on paid services like Amazon or Azure + Kafka. The smarter ones use Datadog (and the like) or ELK. Even though ELK is, on paper, a good tech stack for log analytics, its learning curve compared to Matomo's is off the charts. So let's begin the sysops work with a real-world example. Oh, and if you don't know what Matomo is, please read the following article.

The battle plan?

In the following example, you will learn how to use Matomo to process nginx logs in order to see real user traffic, pages visited, pageviews, and things of that nature.

But first of all, let's configure Matomo so we can feed it the data.

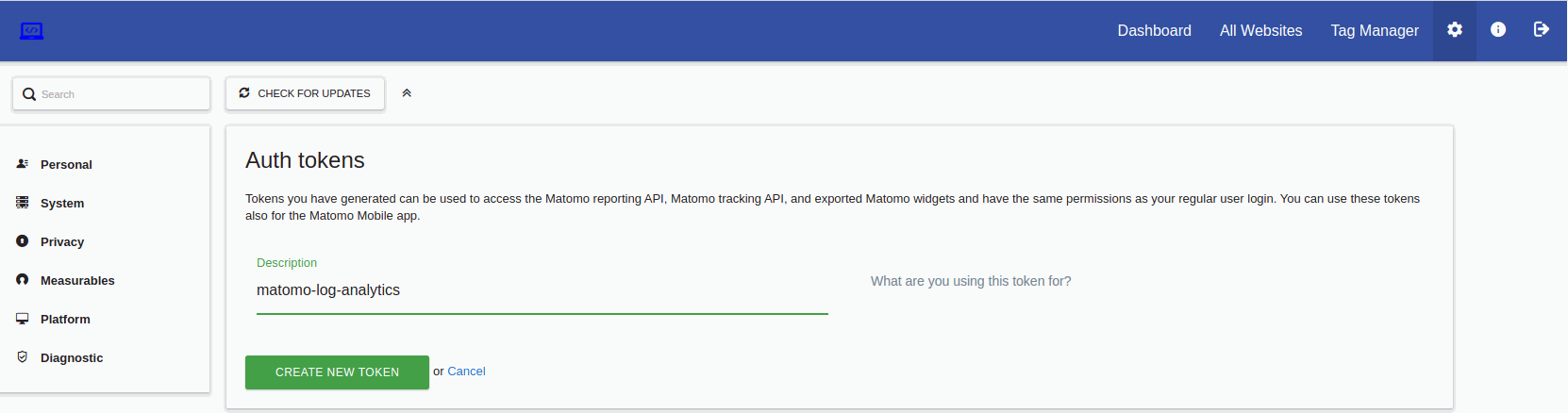

Let's begin by creating the API credentials, which the Python script will use to authenticate and send the logs from the HTTP server to the Matomo endpoint. This is done in the admin section of the Matomo back office (Administration > Personal > Security > Auth tokens > Create new token):

Matomo API Token generation

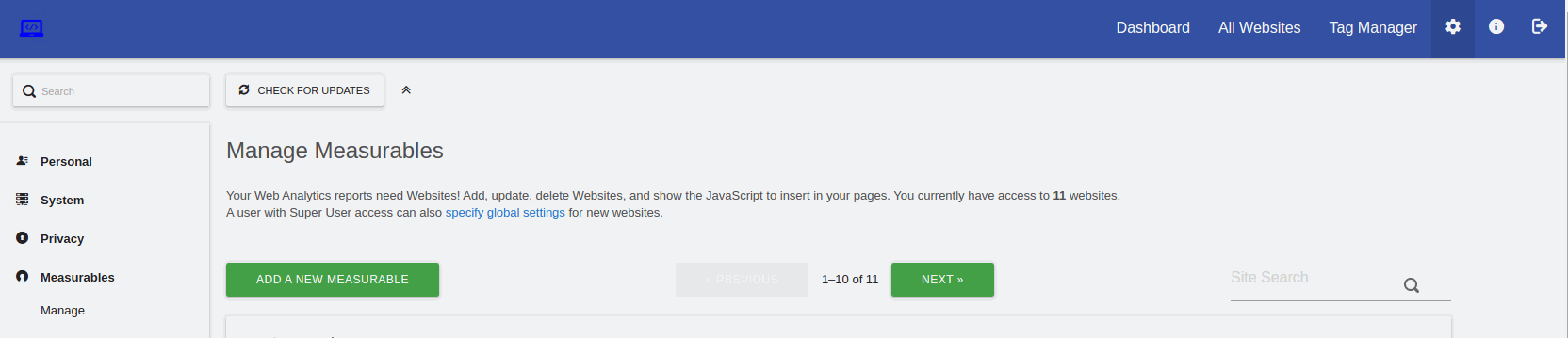

Next, let's create the website that will receive the log data (Measurables > Manage > Add a new website). You can skip this part if you're already using Matomo for frontend tracking and want to aggregate frontend and log analytics in one place, i.e. one website.

Matomo create website

Keep the site ID in mind. It will be needed during the import process.

Now it's time to set up the server.

First you will need to install gawk if you don't have it.

sudo apt-get install gawk

You will also need Python if it is not already installed. At the time of writing, only the following Python versions are supported: 3.5, 3.6, 3.7, 3.8, 3.9 and 3.10. Another requirement is the Matomo version, which must be 4.0 or later; you can nonetheless still try to make it work on older versions.
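Before going further, it can be worth checking that the interpreter on the server falls inside that range. The snippet below is only a minimal sketch and not part of the Matomo tooling: `python_version_supported` is a hypothetical helper name, and the 3.5 to 3.10 bounds are simply the ones listed above.

```shell
#!/bin/bash
# Hypothetical helper (not part of matomo-log-analytics): succeeds when a
# "major.minor[.patch]" version string falls in the 3.5 to 3.10 range above.
python_version_supported() {
    local major minor
    IFS=. read -r major minor _ <<< "$1"
    # Reject anything that is not made of plain numbers.
    [[ "$major" =~ ^[0-9]+$ && "$minor" =~ ^[0-9]+$ ]] || return 1
    [ "$major" -eq 3 ] && [ "$minor" -ge 5 ] && [ "$minor" -le 10 ]
}

# Example: check whichever python3 is on the PATH, if any.
if command -v python3 >/dev/null 2>&1; then
    version="$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')"
    if python_version_supported "$version"; then
        echo "python3 $version is in the supported range"
    else
        echo "python3 $version is outside the supported range"
    fi
fi
```

If the check fails, the simplest option is usually to install one of the listed versions side by side and call it explicitly (as done later with /usr/bin/python3.8).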

Now let's get to the meat and potatoes. The following commands create a matomo directory in the home directory of the current user, then clone the repository containing the Python script needed to send the logs.

cd ~

mkdir matomo

cd matomo

git clone https://github.com/matomo-org/matomo-log-analytics.git

And now we're going to build the script that will process the nginx logs and send them to Matomo to digest. But first, let's create an analytics directory in /opt/; this is where the script, named 'log-analytics-exporter.sh', will be placed.

cd /opt/

mkdir analytics

cd analytics

Below is the full script, followed by an explanation of the instructions. That way, you can tweak it to fit your needs.

#!/bin/bash

source /opt/analytics/last-export-timestamp;

if [ -z "$LAST_EXPORT_DATE_SITE" ];

then

echo "Last date is not set"

echo $LAST_EXPORT_DATE_SITE

LAST_EXPORT_DATE_SITE="2021-11-24 00:00:00 +0100"

fi

echo "drop older than $LAST_EXPORT_DATE_SITE";

awk '!/sw9\.js/ && !/\.css/ && !/bot./' /var/log/nginx/madit.access.log > /tmp/madit.access.log && /usr/bin/python3.8 /home/madit/matomo/matomo-log-analytics/import_logs.py --url=https://matomo-server-url.fr --enable-reverse-dns --idsite=1 --recorders=4 --enable-http-errors --enable-http-redirects --token-auth=GENERATED-TOKEN-API --exclude-older-than="${LAST_EXPORT_DATE_SITE}" /tmp/madit.access.log

TMP_LAST_EXPORT_DATE_SITE=$LAST_EXPORT_DATE_SITE

export LAST_EXPORT_DATE_SITE="$(tail -1 /var/log/nginx/madit.access.log | awk '{

split($4,t,/[[\/:]/)

old = t[4] " " (index("JanFebMarAprMayJunJulAugSepOctNovDec",t[3])+2)/3 " " t[2] " " t[5] " " t[6] " " t[7];

secs = mktime(old)

new = strftime("%Y-%m-%d %T",secs);

print new

}') +0100"

if [ "$LAST_EXPORT_DATE_SITE" = " +0100" ];

then

echo "is wrong +0100"

echo $LAST_EXPORT_DATE_SITE

LAST_EXPORT_DATE_SITE=$TMP_LAST_EXPORT_DATE_SITE

fi

echo "LAST_EXPORT_DATE_SITE='$LAST_EXPORT_DATE_SITE'" > /opt/analytics/last-export-timestamp;

echo "current timestamp index is $LAST_EXPORT_DATE_SITE";

source /opt/analytics/last-export-timestamp;

if [ -z "$LAST_EXPORT_DATE_SITE" ];

then

echo "Last date is not set"

echo $LAST_EXPORT_DATE_SITE

LAST_EXPORT_DATE_SITE="2021-11-24 00:00:00 +0100"

fi

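We first load the last export timestamp file, which stores the date in the LAST_EXPORT_DATE_SITE variable. If the file doesn't exist or the variable is empty, the variable is populated with a date old enough to cover everything you want to import: "2021-11-24 00:00:00 +0100" in the script, but something like "1990-11-24 00:00:00 +0100" works just as well.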
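The same "load or fall back" logic can be wrapped in a small function. This is a sketch under assumptions rather than the script's actual code: `load_last_export` is a hypothetical name, and it expects the state file to contain a single `LAST_EXPORT_DATE_SITE='...'` line, which is the format the exporter script writes.

```shell
#!/bin/bash
# Sketch: read the saved timestamp from a state file, or fall back to a default.
# load_last_export is a hypothetical helper, not part of the original script.
load_last_export() {
    local state_file="$1" default_date="$2"
    # Declared local so the sourced assignment does not leak to the caller.
    local LAST_EXPORT_DATE_SITE=""
    if [ -r "$state_file" ]; then
        # The file holds one line: LAST_EXPORT_DATE_SITE='YYYY-MM-DD hh:mm:ss +0100'
        source "$state_file"
    fi
    # ${var:-default} covers both "file missing" and "variable empty".
    printf '%s\n' "${LAST_EXPORT_DATE_SITE:-$default_date}"
}
```

It could then be used as `LAST_EXPORT_DATE_SITE="$(load_last_export /opt/analytics/last-export-timestamp "2021-11-24 00:00:00 +0100")"`, which also avoids the error message that `source` prints on the very first run, when the file does not exist yet.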

Next we get to the export part:

awk '!/sw9\.js/ && !/\.css/ && !/bot./' /var/log/nginx/madit.access.log > /tmp/madit.access.log && /usr/bin/python3.8 /home/madit/matomo/matomo-log-analytics/import_logs.py --url=https://matomo-server-url.fr --enable-reverse-dns --idsite=1 --recorders=4 --enable-http-errors --enable-http-redirects --token-auth=GENERATED-TOKEN-API --exclude-older-than="${LAST_EXPORT_DATE_SITE}" /tmp/madit.access.log

The above command reads the logs from the madit.access.log file and drops the lines referring to CSS files and bots, as well as service worker requests (sw9.js here). You can customize this part to drop any lines you want from the nginx log files before exporting them to Matomo. The filtered output is stored in a temporary file (/tmp/madit.access.log). The rest is self-explanatory: the logs are exported using the Python script (make sure to adapt the Python version to the one on your server; here we're using python3.8). Also, the site ID must match the website ID on the Matomo server.
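To experiment with the filter before touching real logs, it can be isolated in a function that reads from standard input. This is a minimal sketch: `filter_access_log` is a hypothetical name and the three log lines are made up. The patterns are the ones from the command above, written with escaped dots so that `.` only matches a literal dot in `sw9.js` and `.css`.

```shell
#!/bin/bash
# Sketch of the filtering step as a reusable function reading stdin.
# filter_access_log is a hypothetical name, not part of the original script.
filter_access_log() {
    # "bot." is kept as in the original: "bot" followed by any character,
    # which matches user agents such as "Googlebot/2.1".
    awk '!/sw9\.js/ && !/\.css/ && !/bot./'
}

# Demo on three made-up log lines: only the first one should survive.
filter_access_log <<'EOF'
203.0.113.7 - - [24/Nov/2021:10:15:32 +0100] "GET /products HTTP/1.1" 200 5120 "-" "Mozilla/5.0"
203.0.113.7 - - [24/Nov/2021:10:15:33 +0100] "GET /assets/site.css HTTP/1.1" 200 812 "-" "Mozilla/5.0"
198.51.100.9 - - [24/Nov/2021:10:15:34 +0100] "GET / HTTP/1.1" 200 5120 "-" "Googlebot/2.1"
EOF
```

Adding another exclusion is then a matter of appending one more `&& !/pattern/` condition, for example for image or font files.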

If your Matomo instance restricts access by IP address, the server sending the logs must also be allowed to reach it. This is done by adding a new line to the Matomo allowlist, either in the admin or in the config.ini.php file.

e.g: login_allowlist_ip[] = XXX.XXX.XXX.XXX

Now that the export is done, let's store the date of the latest log line in order to avoid duplicates when the script is called again (which can be twice a day or every hour; this is for you to decide).

export LAST_EXPORT_DATE_SITE="$(tail -1 /var/log/nginx/madit.access.log | awk '{

split($4,t,/[[\/:]/)

old = t[4] " " (index("JanFebMarAprMayJunJulAugSepOctNovDec",t[3])+2)/3 " " t[2] " " t[5] " " t[6] " " t[7];

secs = mktime(old)

new = strftime("%Y-%m-%d %T",secs);

print new

}') +0100"
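One limitation of the snippet above is the hard-coded +0100, which no longer matches the log during daylight saving time or on a server in another timezone. A possible variant, shown here only as a sketch, reads the offset from the log line itself. It assumes the default nginx "combined" format, where the fourth and fifth fields look like `[24/Nov/2021:10:15:32` and `+0100]`; `nginx_line_to_date` is a hypothetical name.

```shell
#!/bin/bash
# Sketch: turn the timestamp of an nginx "combined" log line into the
# "YYYY-MM-DD hh:mm:ss +hhmm" form, keeping the offset found in the log.
# Plain string handling only, so it does not rely on gawk's mktime/strftime.
nginx_line_to_date() {
    awk '{
        # $4 looks like "[24/Nov/2021:10:15:32" and $5 like "+0100]"
        day   = substr($4, 2, 2)
        month = (index("JanFebMarAprMayJunJulAugSepOctNovDec", substr($4, 5, 3)) + 2) / 3
        year  = substr($4, 9, 4)
        clock = substr($4, 14, 8)
        zone  = substr($5, 1, 5)
        printf "%s-%02d-%s %s %s\n", year, month, day, clock, zone
    }'
}

# Demo on a made-up line; prints: 2021-11-24 10:15:32 +0100
nginx_line_to_date <<'EOF'
203.0.113.7 - - [24/Nov/2021:10:15:32 +0100] "GET /products HTTP/1.1" 200 5120 "-" "Mozilla/5.0"
EOF
```

In the script it would be fed with `tail -1 /var/log/nginx/madit.access.log`. On an empty log it prints nothing at all, so the result is an empty string rather than a bare " +0100".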

Last but not least, we have to handle the case where the website has little to no traffic. The log file may then be empty, so there is no date to save, and we keep the date that was in use at the beginning of the script.

if [ "$LAST_EXPORT_DATE_SITE" = " +0100" ];

then

echo "is wrong +0100"

echo $LAST_EXPORT_DATE_SITE

LAST_EXPORT_DATE_SITE=$TMP_LAST_EXPORT_DATE_SITE

fi
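The fallback and the final write to the state file can also be combined in one step. The following is a sketch under assumptions, not the script's actual code: `save_last_export` is a hypothetical helper, and instead of comparing against the exact string " +0100" it accepts any value shaped like "YYYY-MM-DD hh:mm:ss +hhmm" and otherwise keeps the previous date.

```shell
#!/bin/bash
# Sketch: persist the timestamp, keeping the previous one when the new value
# is empty or reduced to a bare offset. save_last_export is a hypothetical
# helper; the state file path is passed in rather than hard-coded.
save_last_export() {
    local state_file="$1" new_date="$2" previous_date="$3"
    # A usable value looks like "YYYY-MM-DD hh:mm:ss +hhmm".
    local pattern='^[0-9]{4}-[0-9]{2}-[0-9]{2} [0-9]{2}:[0-9]{2}:[0-9]{2} [+-][0-9]{4}$'
    if [[ ! "$new_date" =~ $pattern ]]; then
        new_date="$previous_date"
    fi
    # Write to a temporary file first, then rename it, so an interrupted run
    # does not leave a half-written state file behind.
    printf "LAST_EXPORT_DATE_SITE='%s'\n" "$new_date" > "$state_file.tmp" &&
        mv "$state_file.tmp" "$state_file"
}
```

A call such as `save_last_export /opt/analytics/last-export-timestamp "$LAST_EXPORT_DATE_SITE" "$TMP_LAST_EXPORT_DATE_SITE"` would then replace both the check and the final `echo ... >` line.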

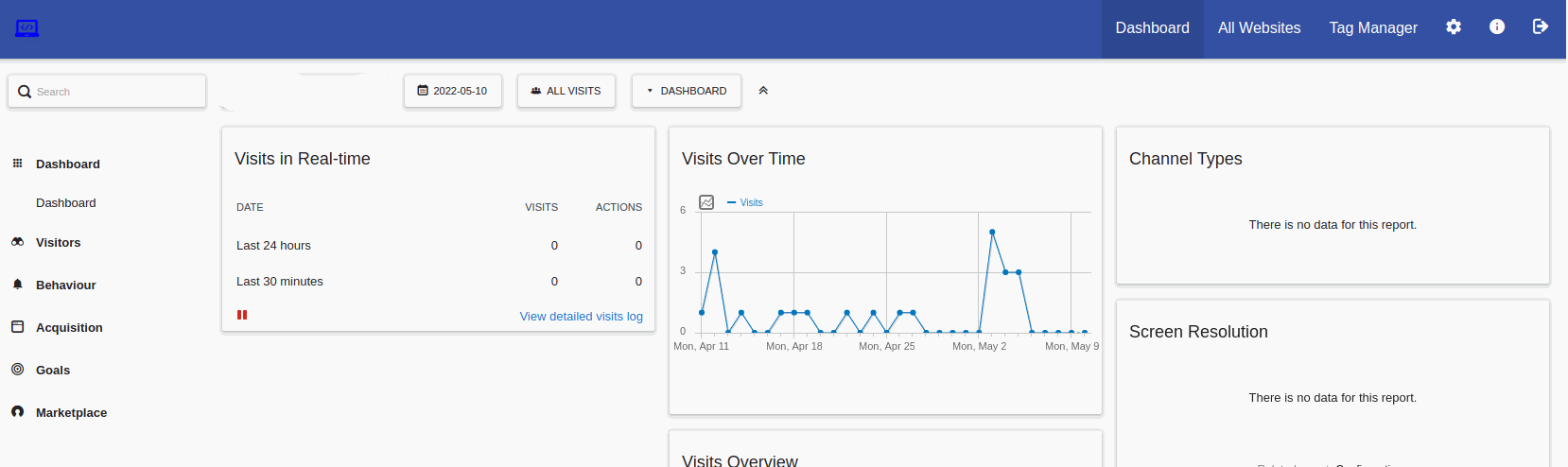

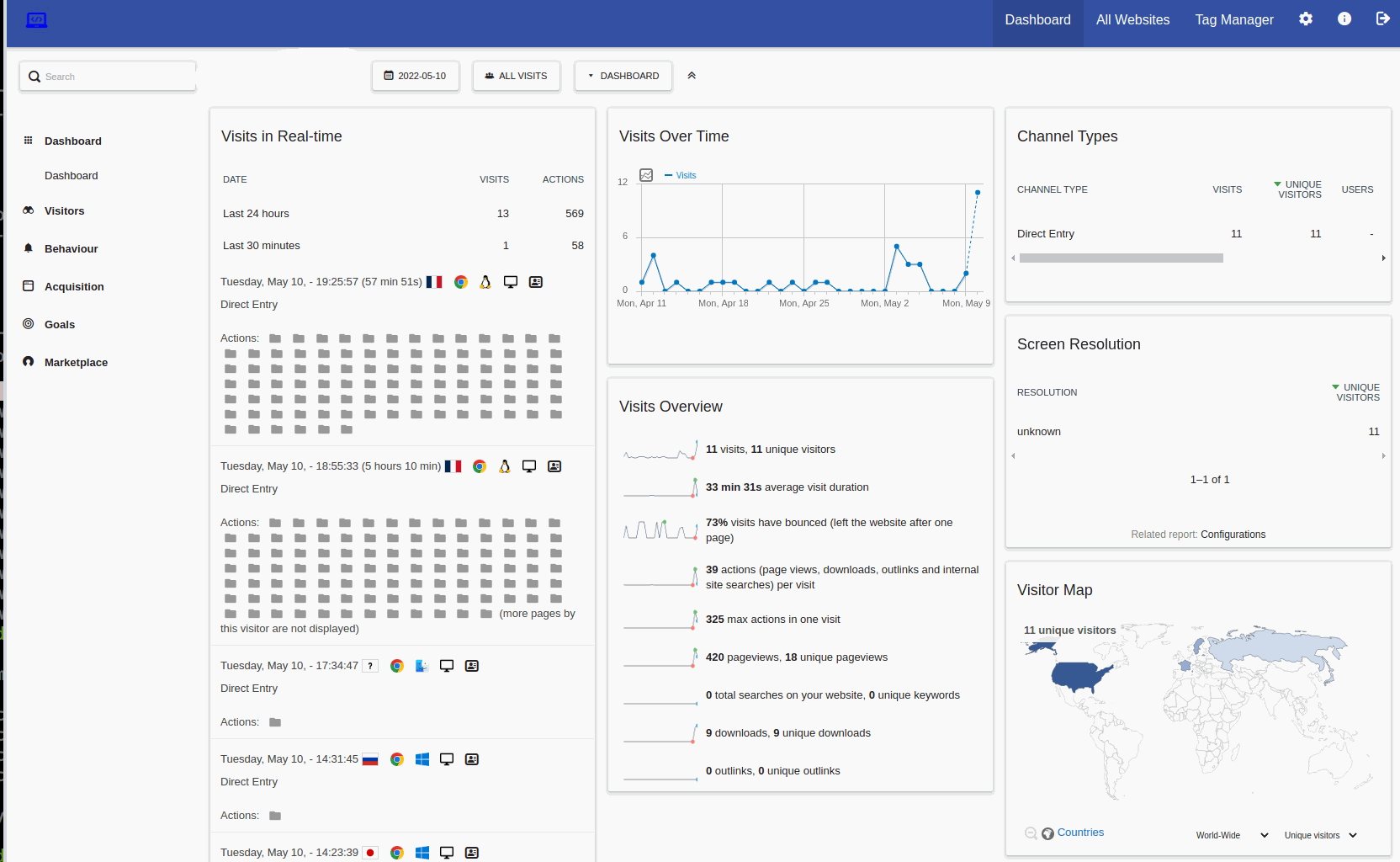

And we're done. As you can see in the screenshots, a site that seemed to have no traffic starts showing users once the web logs from the nginx server are imported.

Analytics before log exporter

Analytics after log exporter

One important thing not to forget, though, is when and how frequently you will run the script. One standard use case is to run the export every 12 hours. You can set up a cron task as follows:

0 */12 * * * /bin/bash /opt/analytics/log-analytics-exporter.sh >> /opt/analytics/exporter.log 2>&1
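If you schedule the export more often, or if your logs are large, one run may still be in progress when cron starts the next one. A simple guard, sketched here under assumptions (`run_exclusively` and the lock path are hypothetical, not part of the original setup), is to take a lock before running the exporter:

```shell
#!/bin/bash
# Sketch: run a command only if no other run currently holds the lock.
# mkdir is used because creating a directory either succeeds or fails
# atomically, so two concurrent runs cannot both acquire the lock.
run_exclusively() {
    local lock_dir="$1"
    shift
    if ! mkdir "$lock_dir" 2>/dev/null; then
        echo "previous run still in progress, skipping" >&2
        return 1
    fi
    "$@"
    local status=$?
    # Release the lock and report the exit status of the wrapped command.
    rmdir "$lock_dir"
    return "$status"
}
```

A wrapper script called from cron could then run `run_exclusively /tmp/log-analytics-exporter.lock /bin/bash /opt/analytics/log-analytics-exporter.sh`. Note that if the process is killed mid-run, the lock directory stays behind and has to be removed by hand.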

And that's it, we've reached the end. Feel free to leave comments below and to share your implementation script or alternative suggestions on our forum.